Windows Azure Planning – A Post-decision Guide to Integrate Windows Azure in Your Environment

October 15, 2011

Once an organization has made the decision to adopt Windows Azure, it is faced with a set of planning challenges, ranging from the basic, “Who should be responsible for signing up and managing the Windows Azure account?” to the more involved, “How will Windows Azure impact the ALM process, availability, and operational costs?” In this guide, we will seek to answer some of these questions.

1. Plan for Administration

Billing

A Windows Azure subscription can be set up by creating a Microsoft Online Services account via the Microsoft Online Services Portal. Before you create a subscription, you must decide on a billing method. Unless you buy resources in bulk ahead of time, providing a credit card to pay for monthly services was the only available option until recently. While the credit card approach works for individuals and smaller organizations, finance departments at larger organizations are simply not structured to support this billing model. Recognizing this as a potential problem, Microsoft has recently introduced an invoice billing model.

Administrators

At least two individuals in your organization should be given Co-Administrator rights to your service. That said, the number of Co-Administrators should be carefully controlled as people in those roles have full access to add, modify, and delete services. Note that adding a user to the Co-Administrator role will accord them administrative privileges across Windows Azure Compute, Storage and SQL Azure services (Windows Azure AppFabric service is not integrated at the time of writing).

Subscriptions

You should determine the number of subscriptions that will be needed. Each subscription is associated with an Azure datacenter and comes with a quota of resources – storage (100 TB) and compute (20 cores). By default, a subscription can contain up to six hosted services (applications) and five storage accounts; these limits can be increased if needed by contacting Windows Azure support. For example, an organization may have two distinct services to host in Windows Azure, each with two environments – production and test. Many organizations will start with one subscription but may want to add other subscriptions later. Thus, it is important to plan meaningful names for subscriptions. One other consideration when planning subscriptions is that storage within a subscription can only be shared by services contained within that subscription. For example, when deploying a service, the package file can only be deployed from an Azure storage account that is part of the same subscription as the service.
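As a rough planning aid, the default quotas quoted above can be turned into a quick estimate of how many subscriptions a portfolio needs. The following is a minimal sketch in Python. The function name and its inputs are hypothetical, and the limits are simply the defaults stated in this section, which support can raise.

```python
import math

# Default per-subscription quotas as quoted in this guide (support can raise them).
DEFAULT_QUOTAS = {"cores": 20, "hosted_services": 6, "storage_accounts": 5}


def subscriptions_needed(cores, hosted_services, storage_accounts, quotas=DEFAULT_QUOTAS):
    """Return the minimum number of subscriptions that satisfies every quota."""
    demand = {
        "cores": cores,
        "hosted_services": hosted_services,
        "storage_accounts": storage_accounts,
    }
    # The most constrained resource decides the count; always plan for at least one.
    return max(1, max(math.ceil(demand[name] / quotas[name]) for name in quotas))


if __name__ == "__main__":
    # Two services, each with production and test environments: 4 hosted services.
    print(subscriptions_needed(cores=32, hosted_services=4, storage_accounts=2))
```

In this example the core quota, not the hosted-service quota, is what forces a second subscription.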

Certificate Management

Windows Azure relies on X.509 v3 certificates for authentication. There are two types of certificates used by Windows Azure:

Management Certificates are associated with a subscription and, as the name suggests, are utilized when performing management operations against it. Typical clients include Windows Azure Tools for Visual Studio, command line tools such as CSUpload.exe, and custom programs leveraging the Windows Azure Service Management REST API. The management certificate uploaded to Windows Azure contains only the public key (.cer file); clients connecting to the subscription need to be trusted and must hold the corresponding private key.

Service Certificates are associated with a service and are used by Windows Azure services to enable secure interaction with their users. For example, a Windows Azure service that hosts an HTTPS-based web endpoint will need a service certificate. Another example is a WCF-based Windows Azure service that requires mutual authentication. Unlike the management certificate, service certificates are private key (.pfx) files. Service certificates associated with a service are analogous to certificates in a certificate store on Windows. In fact, Windows Azure copies the service certificates to each role (Virtual Machine) instance associated with a service.

Here are some of the considerations for managing Windows Azure certificates:

· There can be a maximum of 10 management certificates associated with a subscription per administrator. So if there is a need to associate more than 10 certificates, additional administrators would need to be added to a subscription. Note that administrators can share certificates.

· There is no role-based access to the portal. All administrators have the same level of access. This is why it is important to keep the setup simple by limiting the number of administrators and distinct management certificates.

· Follow industry best practices to secure certificates. If, for whatever reason, there is a need for a large number of certificates, consider the use of a third-party tool to manage the certificates.

· Management and service certificates can be self-issued or trusted certificates. Self-issued certificates will cause a security warning that will need to be explicitly bypassed. This is more likely an issue for service certificates that are used to secure HTTPS endpoints.

· Service certificates can be managed by multiple individuals. For example, developers typically work with self-signed certificates. At a later time, IT pros can replace self-signed certificates with trusted ones without having to redeploy the service. This is possible because Windows Azure references the certificate (store name and location) as a logical name stored in the service definition file. This way a certificate can be changed by simply uploading a new service certificate and changing the certificate thumbprint in the service configuration file.
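The thumbprint mentioned in the last consideration is the SHA-1 hash of the DER-encoded certificate, written as hexadecimal. The sketch below, in Python, shows one way to compute it and to check whether a replacement certificate calls for a new thumbprint in the service configuration file. The function names are hypothetical, and reading the certificate bytes from disk is left out.

```python
import hashlib


def certificate_thumbprint(der_bytes):
    """Return the SHA-1 thumbprint (uppercase hex) of a DER-encoded certificate."""
    return hashlib.sha1(der_bytes).hexdigest().upper()


def thumbprint_changed(old_der, new_der):
    """True when the replacement certificate needs a new thumbprint in the configuration."""
    return certificate_thumbprint(old_der) != certificate_thumbprint(new_der)
```

Because the service definition refers to the certificate only by its logical name, the thumbprint value is the one thing that has to change when a self-signed certificate is replaced by a trusted one.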

Operations

The Windows Azure portal provides unified access to various operational tasks such as creating subscriptions, creating storage accounts, deploying services, etc. At the same time, most administrators prefer a repeatable, automated way of performing these tasks. Fortunately, the Windows Azure Platform PowerShell Cmdlets can make this task easier. These Cmdlets fall into four categories:

· Hosted services, affinity groups and role instance management, including functions like adding a new hosted service, getting an instance status, adding an affinity group, etc.

· Automation of SQL Azure deployments, including creating and removing a SQL Azure server, adding a firewall rule, and setting or resetting a SQL Azure password.

· Windows Azure Diagnostics Management, including downloading specified performance counters, and clearing Windows logs from storage.

· Windows Azure Storage including adding a new storage account, clearing a container, and getting and setting storage analytics.

For more information please refer to http://michaelwasham.com/2011/09/16/announcing-the-release-of-windows-azure-platform-powershell-cmdlets-2-0/ & http://msdn.microsoft.com/en-us/library/gg433135.aspx

Affinity Groups

When creating a Windows Azure service, you can either specify the datacenter location or specify that the services you create should be part of an affinity group. Affinity groups are logical containers for associating services and storage accounts to a data center. The advantage of having all your services in the same affinity group is low latency between these services. Once an affinity group has been defined at a subscription level, it becomes available to any subsequent services or storage accounts. It is recommended that administrators first create an affinity group before provisioning services and storage accounts.

Note that currently the affinity groups do not apply to SQL Azure and Windows Azure AppFabric. You will need to ensure that you create an instance of these services in the datacenter to which the affinity group is associated.

2. Plan for ALM

David Chappell, in his ALM whitepaper, divides Application Lifecycle Management (ALM) into three distinct areas: governance, development and operations (as shown in the screenshot below).

Pasted from http://go.microsoft.com/?linkid=9743693

For ALM to be effective, an organization needs a common foundation (depicted in screenshot below) for version control, build management, test case management, reporting, and other functions. Having a common foundation makes it easier to share artifacts between teams. Additionally, having a consistent foundation makes it possible to share processes for build and deployment, thereby reducing overall costs.

Pasted from http://go.microsoft.com/?linkid=9743693

The governance aspects of ALM remain largely the same for Windows Azure based projects. Teams can continue to use tools in place for use case development, project management, and application portfolio management.

Since Microsoft Team Foundation Server (TFS) is a common choice for Windows Azure based projects, we will use it for our discussion here – although this discussion is applicable to other ALM tools such as IBM Rational Team Concert. The development aspect of ALM will be impacted by Windows Azure in the following ways:

· Unlike traditional projects, most of the initial development will take place inside the cloud emulator built into Visual Studio. From time to time, developers will need to develop directly against Windows Azure. This is mainly due to the differences in behavior between the emulator and Windows Azure. For example, code running in the emulator runs with administrative privileges and has access to the GAC. This is not the case for code running in Windows Azure. Another example is the Azure AppFabric services: there are no emulator versions of these services. For a complete list of differences between the emulator and Windows Azure please refer to http://msdn.microsoft.com/en-us/library/gg432960.aspx

· Starting with version 1.4 of Windows Azure Tools for Visual Studio, it is possible to create separate target files for different environments. By default, it creates a local and a cloud target environment. It is possible to create a custom target configuration such as “Production” or “Test”. This feature makes it easy for developers to test against the emulator and Windows Azure hosted service.

· The test team will primarily be testing against Windows Azure hosted services. This means that build definitions (including compilation, code analysis, smoke tests and deployment steps) need to be modified to target a Windows Azure based test environment. Fortunately, CodePlex tools such as DeployToAzure provide custom workflow actions for extending the build process. Another approach would be to use the Azure PowerShell Cmdlets to modify the build definition.

· Windows Azure service deployments are conducted by copying the service package and configuration files to Azure Blob storage first (unless the deployments are being done manually via the Azure portal). So you would want to copy the build output to an Azure Blob container (described in the previous step). As an aside, the elastic nature of Azure storage makes it a great place to archive build outputs. Please refer to Joel Forman’s post on Building and Deploying Windows Azure Projects using MSBuild.

· Windows Azure provides production and staging environments to create a service deployment. Typically, a service is deployed to the staging environment to test it before deploying it to the production environment. A service in staging can be promoted to the production environment by a simple swapping process, without the need for redeployment. For details on swapping, please refer to the MSDN article. The swapping feature can be used to deploy changes or to roll back to a previous version of the service without interrupting the service.

· Tom Hollander, in his blog post, provides an example of a typical Windows Azure setup:

Pasted from http://blogs.msdn.com/b/tomholl/archive/2011/09/28/environments-for-windows-azure-development.aspx

· The elastic nature of Windows Azure makes it suitable for generating load for a stress test. One such test setup is proposed in a series of blog posts by ricardo. As shown below, agents run on Windows Azure, thereby making it possible to quickly generate load on the system. The test controller resides on-premise and uses Windows Azure Connect to communicate with the agents.

Pasted from <http://blogs.msdn.com/b/ricardo/archive/2011/04/08/load-testing-with-agents-running-on-windows-azure-part-1.aspx>

3. Plan for Availability

Architectural Considerations

While a detailed discussion of architectural best practices is out of scope for this guide, it is important to note that availability begins with a good design. Every aspect of your Windows Azure Service should take advantage of the scale-out features available on the platform. Let us refer to the following architectural diagram in order to review some key scalability aspects:

· Load Balancer. Each service is assigned a single Virtual IP (VIP) for all role instances. The VIP is managed by the load balancer. The load balancer uses a simple round-robin approach for routing incoming requests. This means that each role instance has to be stateless. Any role instances that become non-responsive for any reason are taken out of the load balancer automatically, and Windows Azure will start the process of healing the service by adding another role instance in due course. This is why it is important to have at least two role instances to maintain availability (in fact, having two role instances is a requirement to qualify for the SLA). The same principle of availability and self-healing applies to the worker roles shown in the diagram below. In addition to having multiple role instances, it is also important to logically split the role instances into fault and upgrade domains. Please refer to Improving Application Availability in Windows Azure for more information.

· Partitioning of the Azure Storage Queues. The Azure Storage Queues are used to communicate between Web and Worker roles. For availability and scalability reasons, it is important to consider partitioning the Azure queue.

· Storage. Azure storage and SQL Azure are designed to be fault tolerant. Each manages multiple replicas of data behind the covers and can failover to a different replica, as needed. That said, it is important to partition your storage appropriately to not only improve the availability but also throughput. Please refer to Windows Azure Storage Scalability Targets for more information.

· Intelligent Retry Logic. It is important to design your code to handle failure conditions and temporary unavailability by baking in retry logic when interacting with SQL Azure, Azure storage, etc. The Transient Fault Handling Framework, developed by the Customer Advisory Team, provides a reusable set of components for adding intelligent retry logic to applications.

· Application Developer Responsibilities. Finally, it is important to know of instances where the platform does not provide high availability out of the box. For example, it is the responsibility of the application developer to make inter-role communication fault tolerant.
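The retry logic described above can be sketched in a few lines. The following Python example is a generic illustration of retrying with exponential backoff and jitter; it is not the API of the Transient Fault Handling Framework. The `TransientError` class, the function name, and the default delays are all assumptions made for the sketch.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical marker for failures that are worth retrying (e.g. throttling)."""


def call_with_retry(operation, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Run operation(), retrying transient failures with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise  # Give up: let the caller see the last failure.
            # The delay doubles each attempt; jitter avoids synchronized retries.
            delay = base_delay * (2 ** (attempt - 1))
            sleep(delay + random.uniform(0, delay / 2))
```

Only errors known to be transient should be retried; a failure such as an invalid query should surface immediately rather than be repeated.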

Global Availability

In the previous section, we reviewed some design considerations for making Windows Azure services more available. However, all of those considerations were limited to services deployed within one datacenter. No matter how available a service is within a data center, it does no good if there is a data center outage. If the business requirements dictate accounting for such a contingency, there are additional considerations that apply. In this section we will discuss design considerations for making your application available globally – across different Windows Azure datacenters.

· Azure Traffic Manager. We already know that in order for an Azure service to be globally available, it must be deployed to more than one data center. However, we also know that each instance of the service gets its own VIP. So how are customers to know which VIP address to use, especially when there is a contingency and the service has to be failed over to another datacenter? This is where Azure Traffic Manager comes in. It allows customers to balance traffic to services hosted across multiple datacenters. Note that load balancing may be triggered due to load on the system or a failure condition. The following diagram explains at a high level how Traffic Manager works. Based on the defined policy, foo.cloudapp.net can be directed to either foo-us.cloudapp.net or foo-europe.cloudapp.net.

· Deployment Strategies. The ability to route to a different datacenter is great, but what about the data? How will data be synchronized across datacenters? How will Access Control Service or Service Bus endpoints be accounted for? The following table (pasted from David Aiken’s presentation) provides a summary of deployment strategies that make a Windows Azure service globally available. The strategies included in this table are a combination of Windows Azure features and custom code. For example, the SQL Azure Data Sync Service can be used to synchronize the SQL Azure database instances. Windows Azure Storage has geo-replication (within a region) enabled by default. However, note that geo-replicated data is not directly accessible to services. It is designed to handle data center outages. Service Bus and Access Control Service are not currently covered by Traffic Manager. This means that in order to achieve global availability, separate namespaces will need to be created.

| Windows Azure Service | Multi-datacenter deployment strategy |
| --- | --- |
| Windows Azure Compute | Create multiple deployments – use Traffic Manager to route traffic |
| Windows Azure Storage | Geo-replication is turned on by default |
| SQL Azure | Use SQL Azure Data Sync Service |
| Reporting Services | Deploy reports to different locations |
| Service Bus | Create multiple namespaces |
| Access Control Service | Create multiple namespaces |
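To make the routing decision concrete, the sketch below shows a failover-style policy in Python: try the deployments in priority order and return the first one that passes a health check. This is only an illustration of the decision, not Traffic Manager's API. The function name and the `is_healthy` callback are hypothetical, and the endpoint names are the ones used in the example above.

```python
def resolve_endpoint(endpoints, is_healthy):
    """Return the first healthy endpoint in priority order, or None if all are down."""
    for endpoint in endpoints:
        if is_healthy(endpoint):
            return endpoint
    return None


if __name__ == "__main__":
    deployments = ["foo-us.cloudapp.net", "foo-europe.cloudapp.net"]
    # Pretend the US deployment is down; traffic falls through to Europe.
    down = {"foo-us.cloudapp.net"}
    print(resolve_endpoint(deployments, lambda name: name not in down))
```

Routing traffic is the easy half: the data behind each deployment still has to be kept in step using the strategies in the table.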

Service Updates

There are three options for updating a deployed Windows Azure Service.

o Delete and redeploy. Given that the previous service instance is deleted, this option offers maximum flexibility with the updates that can be applied. On the flip side this option is most disruptive because it incurs service downtime.

o Deploy to staging followed by a VIP Swap. This option involves deploying an updated version of the service to staging and then performing a VIP swap. There is no downtime incurred with this option; however, existing connections are not moved over to the newly promoted instance. The types of updates that can be applied via this option are limited as well. There is also an additional cost associated with standing up the staging environment.

o In-place or rolling upgrade. This option involves deploying the updates to a service one upgrade domain at a time. (It is recommended that each service have at least two upgrade domains defined.) Only instances associated with the upgrade domain being updated are stopped, while other instances keep running.

The following table depicts the kinds of updates that can be applied via each of the three upgrade options discussed above.

| Changes permitted | In-place | VIP Swap | Delete and re-deploy |
| --- | --- | --- | --- |
| Operating system version | Yes | Yes | Yes |
| .NET trust level | No | Yes | Yes |
| Virtual machine size | No | Yes | Yes |
| Local storage settings | No | Yes | Yes |
| Number of roles for a service | No | Yes | Yes |
| Number of instances of a particular role | Yes | Yes | Yes |
| Number or type of endpoints for a service | No | No | Yes |
| Names and values of configuration settings | Yes | Yes | Yes |
| Values (but not names) of configuration settings | Yes | Yes | Yes |
| Add new certificates | Yes | Yes | Yes |
| Change existing certificates | Yes | Yes | Yes |
| Deploy new code | Yes | Yes | Yes |

The above table is pasted from http://msdn.microsoft.com/en-us/library/ff966479.aspx

For recommendations on improving availability during the upgrade process refer to this MSDN page.
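When a release combines several kinds of change, the table above can be used as a lookup to find which update options remain available. The following Python sketch encodes the table's rows and returns the options that permit every requested change. The dictionary keys are shortened labels chosen for this example, and the function name is hypothetical.

```python
# Rows copied from the table above: (in-place, VIP swap, delete and re-deploy).
PERMITTED = {
    "operating system version": (True, True, True),
    ".net trust level": (False, True, True),
    "virtual machine size": (False, True, True),
    "local storage settings": (False, True, True),
    "number of roles": (False, True, True),
    "number of instances": (True, True, True),
    "number or type of endpoints": (False, False, True),
    "configuration settings": (True, True, True),
    "add new certificates": (True, True, True),
    "change existing certificates": (True, True, True),
    "deploy new code": (True, True, True),
}
OPTIONS = ("in-place", "VIP swap", "delete and re-deploy")


def allowed_options(changes):
    """Return the update options that permit every requested change."""
    return [
        option
        for index, option in enumerate(OPTIONS)
        if all(PERMITTED[change][index] for change in changes)
    ]
```

For instance, a release that deploys new code and also changes the virtual machine size rules out an in-place upgrade, leaving the VIP swap as the least disruptive choice.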

Diagnostics

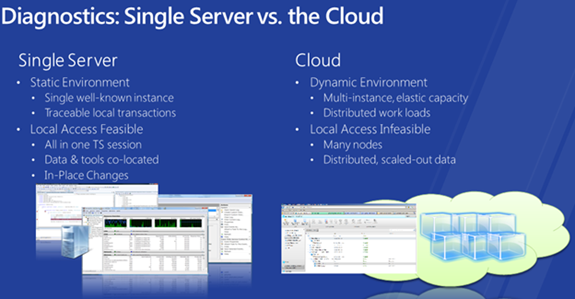

Designing for availability does not preclude the need to collect diagnostics. In fact, as shown in the diagram below, the need for collecting diagnostics is even greater in the cloud.

Fortunately, Windows Azure Diagnostics enables you to collect diagnostic data from an application running in Windows Azure and store it in Windows Azure storage. The following diagram depicts the overall architecture of how diagnostic data is collected. Each role has a diagnostic monitor process that collects the data requested by the user. This includes Windows Event Logs, Performance Counters, crash dumps, and even custom file-based logs. The diagnostic data is written to the local disk within the role instance. At a pre-determined frequency, the diagnostic data is transferred to Windows Azure Storage. Please refer to this MSDN link for more information on collecting diagnostic data.

There are a number of open source and third-party tools that can simplify the collection and analysis of diagnostic data. These include Azure MMC and Cerebrata’s Azure Diagnostic Manager.

Organizations that rely on Operations Manager for service management of their Windows environment can add the Monitoring pack for Windows Azure Applications. This pack allows for collecting performance information, Windows events, and .NET Framework trace data from the Windows Azure storage account. For more information please refer to this download page.

Monitoring

Diagnostics can help monitor the internal health of a Windows Azure service, but they do not tell you if the application is visible to the users. This is where monitoring comes in. Monitoring applications can track the health of your Windows Azure applications and initiate alerts and notifications in the event of an emergency. Again, organizations using Operations Manager today can use the Azure pack to monitor Windows Azure services as well. In addition, there are a number of third-party providers such as Cumulux’s ManageAxis that can provide similar capability.

4. Plan for Storage

Windows Azure offers a number of different storage options that are elastic and highly available.

Consistent with the theme of this paper, we will focus on how each storage option impacts the existing IT setup. (A discussion on the applicability of each option (SQL vs NoSQL) is outside the scope for this section.) Additionally, we discuss the differences in cost in a later section.

There are two storage solutions available on the Windows Azure platform – SQL Azure and Azure Storage.

SQL Azure

SQL Azure offers a familiar relational capability. However, there are some key differences as compared to an on-premise SQL Server. Let us look at how these differences will impact planning and administration of SQL Azure databases.

· When a SQL Azure subscription is created, it results in the creation of a master database and a server-level principal (similar to the sa role on-premise). The master database keeps track of all the databases and logins that are provisioned. Even though the databases are physically located on different machines, the SQL Azure server provides an abstraction that groups all the SQL Azure databases. For additional information on SQL Azure administration refer to this MSDN page.

· Database administrators will continue to manage schema creation, index tuning, and security administration. However, being a multi-tenant service, SQL Azure abstracts the physical aspects of database setup and administration. For instance, it is not possible to specify a file group where the database or index will reside.

· SQL Azure databases are designed to be fault tolerant within a datacenter, so there is no need for a custom clustering or mirroring setup. However, as discussed earlier, in order to make the SQL Azure database globally available, multiple copies of databases will need to be provisioned across different datacenters. These database copies can then be synchronized using the SQL Azure Data Sync Service. As you would imagine, the synchronization across different datacenters is not atomic. The inherent latency involved in synchronization can result in loss of data and needs to be accounted for in the design of the application.

· On-premise tools such as SQL Server 2008 R2 SSMS can be used to manage the SQL Azure instances. In addition, third-party tools such as Redgate support SQL Azure as well. For lightweight operations against SQL Azure databases, Microsoft provides a Silverlight-based tool via the SQL Azure portal.

· SQL Azure is accessible via TCP port 1433. This means that to access SQL Azure databases you will need to ensure that firewall rules allow outgoing traffic on port 1433.

· As discussed, given that the physical aspects of a SQL Azure database are not accessible, SQL backup and restore commands will not work. Fortunately, SQL Azure offers a capability to back up and restore databases to Azure Blob storage. There are third-party solutions available as well.

· SQL Azure administrators and developers need to take into account the transient nature of connections. As stated, SQL Azure databases reside in a multi-tenant setup. In order to prevent one tenant from starving other tenants of resources, database connections can be throttled, resulting in termination or temporary unavailability. This is why it is important to incorporate retry logic when accessing the database. Frameworks such as the Transient Fault Handling Framework can make this task easier.

· Currently, there is a 50 GB limit on a single instance of the SQL Azure database. In order to deal with this limitation, applications are expected to “shard” (or partition) the data across multiple databases. Today the partitioning logic is owned by the application. A forthcoming feature of SQL Azure, referred to as federation, will allow the partitioning logic to be managed outside the application – by database administrators. For example, if the distribution of data across the partitions changes over time, the federation feature will allow splitting and merging of data without affecting the availability or consistency of data.
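Application-owned sharding, as described in the last point, usually comes down to two decisions: how many databases are needed and which database a given key lives in. The Python sketch below illustrates both under simple assumptions. The function names, the 80% fill target, and the hash-and-modulo scheme are choices made for this example; they are not part of SQL Azure or of the federation feature.

```python
import hashlib
import math

MAX_DATABASE_GB = 50  # Single-database size limit quoted in this guide.


def shards_required(total_gb, headroom=0.8):
    """Estimate the shard count, filling each database to only `headroom` of the limit."""
    return max(1, math.ceil(total_gb / (MAX_DATABASE_GB * headroom)))


def shard_for(key, shard_count):
    """Map a partition key to a shard index using a stable hash (not Python's hash())."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count
```

A drawback of this simple scheme is that changing `shard_count` remaps most keys, which is part of why splitting and merging shards is costly when the application owns the partitioning logic.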

Windows Azure Storage

Azure storage offers three different kinds of storage options – Tables, Blobs and Queues. Azure Storage is designed for durability and scalability. For more information on Windows Azure Storage, please refer to this MSDN page.

Here are some planning considerations for Windows Azure Storage:

· Windows Azure Tables. Windows Azure Tables is a good choice for storing data that will benefit from a “No-SQL” pattern – a massively large volume with low duty (in terms of query processing). Windows Azure Storage manages multiple replicas of the dataset to protect against hardware failures. However, for business continuity reasons it may be important to protect against errors in the application by maintaining snapshots of data. Blob storage has a built-in snapshot capability (while Windows Azure Table Storage does not). Tools such as AzureStorageBackup or lokad-snapshot rely on the snapshot capability of blob storage to offer backup functionality. Cerebrata Azure Management Cmdlets can also be used to back up a storage account to a local computer and to restore the contents of a storage account.

· Geo-replication. As stated previously, geo-replication is enabled by default at no cost. However, note that geo-replication is a platform-level feature that comes into play when there is a data center outage. Replicated data is not directly available to the applications. Furthermore, the geo-replication feature is only available between data centers in the same region.

· Tools. There are a number of open source and commercial options available for managing Windows Azure Storage including tools from http://www.cerebrata.com/ and http://cloudberrylab.com/. In addition, Microsoft has made a sample available (including source code) to manage Windows Azure Storage. This sample can be found at http://wapmmc.codeplex.com/ .

· CDN. Consider enabling the CDN feature if the data stored in Windows Azure Blob is public data. For data that is not public, consider the use of shared access signatures.

· Windows Azure Blobs. As the name suggests, Windows Azure Blobs is designed for storing large binary objects. Blob storage is a great place to host streaming content. It supports efficient resume for browsers and streaming media players via HTTP headers such as Accept-Ranges. It is expected that smooth streaming for video content will be available in the future.

· SMB. Page blobs can be mounted as an NTFS volume on a cloud or on-premise machine (a feature referred to as the X-Drive). Only a single instance can be allowed read-write access via X-Drive. However, using SMB, it is possible to mount a drive on one role instance and then share that out to other instances. For more information refer to this blog post. The following diagram depicts how Windows Azure Drive works.

· Consider enabling Windows Azure Storage Metrics to better understand usage patterns. For example, it is possible to obtain an hourly summary of the number of requests, average latency and more for Blobs, Tables and Queues. For more information refer to this MSDN page.

· Consider enabling Windows Azure Storage Logs to trace requests executed against storage accounts. For example, it is possible to capture the Client IP that issued a “Delete Container”. For more information refer to this MSDN page.

· Review the throughput guidelines for Windows Azure Storage to make sure that the scalability needs can be met. Additionally, consider using tools such as Azure Throughput Analyzer that can help measure the upload and download throughput achievable from your on-premise environment.
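The resume support mentioned under Windows Azure Blobs relies on standard HTTP range requests: a server that advertises Accept-Ranges: bytes will honor a Range header asking for the remainder of an object. The Python sketch below builds that header for an interrupted download. The function name is hypothetical, and issuing the request itself is left to whichever HTTP client you use.

```python
def resume_headers(bytes_downloaded, total_size=None):
    """Build the HTTP Range header that asks for the remainder of a download."""
    if bytes_downloaded < 0:
        raise ValueError("bytes_downloaded must be non-negative")
    if total_size is not None and bytes_downloaded >= total_size:
        return {}  # Nothing left to fetch.
    # An open-ended range means "from this offset to the end of the object".
    return {"Range": f"bytes={bytes_downloaded}-"}
```

A server that honors the range replies with status 206 (Partial Content), and the client appends the returned bytes to what it already holds.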

Hybrid Solution – On Premise Data Store

There are scenarios where a cloud based data store is not viable. Here are some scenarios:

· Compliance (HIPAA or PCI DSS) concerns may prevent the data from being stored in the public cloud.

· Non-availability of features such as encryption in SQL Azure.

· Inability to undertake certain performance improvement steps, such as changing the fill factor or pad index, or improving disk I/O by moving to a RAID or another type of controller.

In such situations it may be advisable to host the data in SQL Server running on-premise. The connectivity to the on-premise instance of SQL Server will be via Windows Azure Connect. Windows Azure Connect provides IP-based network connectivity between on-premise and Windows Azure resources.

5. Plan for Compute

Windows Azure compute instances are where applications are hosted. The Windows Azure platform takes care of the operation constraints (administration, availability and scalability) so developers can focus on the applications. However, as you would imagine, some amount of planning / governance is needed to make the most of the compute service. Here are some key considerations:

· Compute instances come in different sizes. You will need to decide which instance meets your needs by reviewing the characteristics of each instance size.

· You will need to decide in which data center to provision the compute instances. As discussed, you need to consider location affinity, fault tolerance and global availability needs.

· There is a heartbeat-based system for monitoring the health of compute instances. If a hardware or software failure brings a compute instance down, the Windows Azure platform will detect it. (Refer to the diagram below.) The worst-case reaction time is heartbeat interval + heartbeat timeout, or approximately 10 minutes.

The above diagram was pasted from http://channel9.msdn.com/Events/TechEd/Europe/2010/COS326

· Profile your applications to determine if compute instances that you have spawned are causing a bottleneck.

· In order to dynamically scale your applications up and down, you will need to determine thresholds for dynamic scaling based on load and demand. The dynamic scaling threshold can be max queue length, % CPU utilization, etc.

· Once you have determined the dynamic scalability threshold, you can use tools such as AzureWatch, AzureScale or Cumulux to scale the instances appropriately.

· In addition to dynamic scaling, consider the periods of the day when the application sees low volumes of access. It may be possible to scale down and back up on a fixed schedule.

· Note that each compute instance is a virtual server hosted on a shared piece of hardware. Given that compute instances run inside a multi-tenant environment, it is important to consider side-channel attacks. For more information, refer to Microsoft Best Practices for Developing Secure Azure Applications.
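
The threshold-based and schedule-based scaling considerations above can be sketched as a small decision function. This is a minimal illustration in Python, not the API of AzureWatch, AzureScale or Cumulux. The ScalingPolicy fields, every threshold value and the desired_instances function are hypothetical, and real thresholds should come from your own profiling data.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class ScalingPolicy:
    """Hypothetical thresholds; every value here is an assumption."""
    max_queue_length: int = 500      # scale up when the work queue is longer
    cpu_high_pct: float = 75.0       # scale up above this CPU utilization
    cpu_low_pct: float = 25.0        # scale down below this CPU utilization
    min_instances: int = 2           # floor (assumed, e.g. for redundancy)
    max_instances: int = 10          # ceiling, to keep costs bounded
    quiet_start: time = time(1, 0)   # start of the scheduled low-traffic window
    quiet_end: time = time(5, 0)     # end of the scheduled low-traffic window
    quiet_instances: int = 2         # instance count during the quiet window


def desired_instances(current, queue_length, cpu_pct, now, policy):
    """Return the instance count to aim for, one step at a time."""
    overloaded = (queue_length > policy.max_queue_length
                  or cpu_pct > policy.cpu_high_pct)
    if overloaded:
        # Load-based rule wins, even inside the quiet window.
        target = current + 1
    elif policy.quiet_start <= now < policy.quiet_end:
        # Schedule-based rule: drop to the quiet-window count.
        target = policy.quiet_instances
    elif cpu_pct < policy.cpu_low_pct:
        target = current - 1
    else:
        target = current
    # Never leave the configured bounds.
    return max(policy.min_instances, min(policy.max_instances, target))
```

For example, with the default policy, desired_instances(4, 900, 40.0, time(14, 0), ScalingPolicy()) asks for one more instance because the queue is over its threshold, while the same call at 2:00 am with a short queue and moderate CPU falls back to the quiet-window count. Changing the count by one instance per evaluation is a deliberate choice to avoid oscillation; a real policy might also add a cool-down period between changes.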

6. Plan for Cost

With its “utility-like pay-per-use” pricing, cloud computing promises to change the economics of running applications in fundamental ways. This is commonly referred to as Cloudonomics, a term Joe Weinman coined in his seminal article.

In this section, we will review some of the key considerations related to cost of hosting services in Windows Azure:

· You should understand the differences in cost between maintaining a traditional server, a virtualized server, a private cloud and a public cloud. Please refer to the Microsoft white paper for more information.

· It is important to understand the pricing for various Windows Azure Platform components. The easiest way is to start with the Windows Azure pricing calculator to model the costs of various components.

· In the application architecture section above, we reviewed a number of considerations to make the application more scalable and fault tolerant. Added scalability and fault tolerance come at a cost. You need to make sure that business requirements warrant the extra costs. One of the benefits of cloud computing is that many of these options (e.g. deploying the service to additional datacenters, or adding instances) need not be enabled upfront.

· Once you have built a Windows Azure service, it is important to periodically review the monthly bill in conjunction with profile data for your application. This will allow you to eliminate services that are not being used. For example, if the compute instances are not taxed, you may want to scale down to a smaller number of instances. Similarly, if the usage pattern warrants fewer instances during a certain part of the day, automate the scaling to run fewer instances during that time.

· Watch for runaway costs. Even though the cost per transaction may be very small (e.g. 100,000 transactions against Windows Azure storage cost $0.10), unchecked usage can run up costs quickly. For example, consider the following diagram: monitoring 5 performance counters every 5 seconds for 100 instances can cost you more than $260 a month.

Another example is Windows Azure Storage Analytics. Again, the cost of storage may be small per GB, but if left unchecked, the storage costs can mount over time. This is why it is important to declare a retention policy. When a retention policy is applied, Storage Analytics will automatically delete logging and metrics data older than the specified number of days. Note that deletions performed as a result of the retention policy are not billable.

· Use the billing period to your advantage. Compute instances are billed per hour. It makes no sense to shut down and restart an instance for a 30-minute period of inactivity, because you would end up paying for two compute hours. SQL Azure charges are pro-rated over a month of usage. If you are not using a database for a period of time, back it up to Blob storage and restore it when you need it.

· Be aware of common pitfalls such as the ones described below:

o You are paying for the service as soon as the role has been provisioned. This means that if your service keeps recycling between the ‘initializing’, ‘busy’ and ‘stopping’ states (perhaps due to an error in the deployment package), you continue to accrue charges.

o A service that is in a suspended state continues to incur charges.

o To avoid incurring any charges for a service that is not in use, delete that service.
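
The runaway-cost and billing-period points above can be checked with some quick arithmetic. The sketch below is in Python and rests on assumptions that are not stated in the text: that every performance-counter sample results in one billable storage transaction, that the month has 31 days, and that each separate run of an instance is billed with partial hours rounded up. The function names are hypothetical. Only the transaction price ($0.10 per 100,000) comes from the text.

```python
import math

# Price from the text: 100,000 storage transactions cost $0.10.
PRICE_PER_TRANSACTION = 0.10 / 100_000


def monthly_monitoring_cost(counters, instances, interval_seconds, days=31):
    """Estimated monthly cost of writing performance-counter samples.

    Assumption: each sample is one billable storage transaction.
    """
    samples_per_day = 86_400 // interval_seconds
    transactions = counters * instances * samples_per_day * days
    return transactions * PRICE_PER_TRANSACTION


def billed_compute_hours(session_minutes):
    """Total billed hours for a list of separate instance runs (in minutes).

    Assumption: each run is billed on its own, rounded up to a whole hour.
    """
    return sum(math.ceil(minutes / 60) for minutes in session_minutes)


if __name__ == "__main__":
    # 5 counters, 100 instances, sampled every 5 seconds.
    print(f"Monitoring: ${monthly_monitoring_cost(5, 100, 5):.2f} per month")
    # One continuous hour versus two 15-minute runs split by a shutdown.
    print("Left running:", billed_compute_hours([60]), "hour(s)")
    print("Stopped and restarted:", billed_compute_hours([15, 15]), "hour(s)")
```

Under these assumptions, 5 counters sampled every 5 seconds on 100 instances generate about 268 million transactions in a 31-day month, or roughly $268, which is in line with the "more than $260" figure. Likewise, splitting an hour into two short runs is billed as two hours, whereas leaving the instance running is billed as one.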

7. Conclusion

In this blog post, we reviewed some of the planning considerations for getting started with Windows Azure. We discussed the following major areas: Administration, ALM, Availability, Storage, Compute and Cost. While not an exhaustive set, hopefully this post can help you plan your Windows Azure deployment.

Thanks to Harin Sandhoo, Kevin Griffie and Gaurav Mantri for their contributions to this blog post.

May 3, 2012 at 6:27 pm

Great Stuff, Vishwas!

Just ran across this and it’s quite good.

May 3, 2012 at 11:25 pm

Fantastic Article!! Kudos

November 2, 2012 at 2:33 pm

[…] Windows Azure Planning: A Post-Decision Guide to Integrate Windows Azure in Your Environment: AIS’ CTO Vishwas Lele posted a complete planning guide on how to best adopt and integrate Windows Azure into your organization. (Fleeting Thoughts) […]
