What are cloud native technologies (#kubernetes, #prometheus etc.) and why should I care?

November 25, 2018

PaaS and Cloud Native

If you have worked with Azure for a while**, you are likely very familiar with the IaaS, PaaS and SaaS models. You are also familiar with the main benefit of PaaS over IaaS – the cloud provider manages the underlying storage and compute infrastructure, so you don’t have to worry about things like patching, hardware failures and capacity management. The other important benefit of PaaS is the rich ecosystem of value-add services (such as database, identity and monitoring as a service) that can help reduce time to market.

So if PaaS is so cool, why are Cloud Native technologies like Kubernetes and Prometheus all the rage these days? In fact, it is not just Kubernetes and Prometheus; there is a groundswell of related cloud native projects. Just visit the cloud native landscape to see for yourself – https://landscape.cncf.io/

Key benefits of Cloud Native architecture

Here are ten reasons why cloud native is getting so much attention:

- Application as a first-class construct – Rather than speaking in terms of VMs, storage, firewall rules, etc., cloud native is about application-specific constructs, whether it is a Helm chart that defines the blueprint of your application or a service mesh configuration that defines the network in application-specific terms.

- Portability – Applications can run on any CNCF-certified cloud, on-premises and on edge devices, and the API surface is the same in each of these environments.

- Cost efficient – By densely packing application components (containers) onto the underlying cluster, you can run an application at a significantly lower cost.

- Extensibility model – A standards-based extensibility model allows you to tap into innovations offered by the cloud provider of your choice. For instance, using the Service Catalog and the Open Service Broker for Azure, you can package a Kubernetes application with a service like Cosmos DB.

- Language agnostic – Cloud native supports a wide variety of languages and frameworks, including .NET, Java, Node.js etc.

- Scale your ops teams – Because the underlying infrastructure is decoupled from the applications, the lower levels of your infrastructure are more consistent. This allows your ops team to scale much more efficiently.

- Consistent Resource Model – In addition to greater consistency at the lower levels of infrastructure, application developers are exposed to a consistent set of constructs for deploying their applications, for example Pod, Service and Job. These constructs remain the same across cloud, on-premises and edge environments.

- Declarative approach – Kubernetes, Istio and other projects are based on a declarative, configuration-based model that supports self-healing. This means that any deviation from the “desired state” is automatically “healed” by the underlying system. Declarative models reduce the need for imperative automation scripts, which can be expensive to develop and maintain.

- Community momentum – As stated earlier, the community momentum behind CNCF is unprecedented. Kubernetes is the #1 open source project in terms of contributions. In addition to Kubernetes and Prometheus, there are close to 5000 projects that have collectively attracted over $4 billion of venture funding! In the latest survey (August 2018), the use of cloud native technologies in production has gone up by 200% since December 2017.

- Ticket to DevOps 2.0 – Cloud native delivers the well-recognized benefits of what is being termed “DevOps 2.0”, which combines hermetically sealed, immutable container images, microservices and continuous deployment.
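To make the declarative approach concrete, here is a minimal sketch (in C#, to match the code elsewhere on this blog) of the reconcile step at the heart of a self-healing system: compare the desired state with the observed state and compute the actions needed to close the gap. The `ReplicaReconciler` class and its `Reconcile` method are hypothetical illustrations, not the Kubernetes API; a real controller repeats this comparison continuously as it observes the cluster.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical sketch of a declarative reconcile step (not the Kubernetes API).
public static class ReplicaReconciler
{
    // Returns the actions needed to move the observed state (runningReplicas)
    // to the desired state (desiredReplicas). Once those actions have been
    // applied, calling it again returns no actions: the system has converged.
    public static List<string> Reconcile(int desiredReplicas, IList<string> runningReplicas)
    {
        if (desiredReplicas < 0)
            throw new ArgumentOutOfRangeException(nameof(desiredReplicas));

        var actions = new List<string>();
        int missing = desiredReplicas - runningReplicas.Count;

        // Too few replicas (for example, a container crashed): start replacements.
        for (int i = 0; i < missing; i++)
            actions.Add("start");

        // Too many replicas: stop the surplus ones beyond the desired count.
        foreach (var name in runningReplicas.Skip(desiredReplicas))
            actions.Add("stop " + name);

        return actions;
    }
}
```

For example, declaring three replicas while only `web-1` is running yields two `start` actions, and a matching count yields an empty list. The caller only states the desired number; it never scripts the individual recovery steps.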

Azure and CNCF

Fortunately, Microsoft has been a strong supporter of CNCF (it joined CNCF back in 2017 as a platinum member). Since then it has made significant investments in a CNCF compliant offering in the form of Azure Kubernetes Service (AKS). AKS combines the aforementioned benefits of cloud native computing with a fully managed offering – think of AKS as a PaaS that is also CNCF compliant.

Additionally, AKS addresses enterprise requirements like compliance standards and integration with capabilities like Azure AD, Key Vault and Azure Files. Finally, offerings like Azure Dev Spaces and Azure DevOps greatly enhance the CI/CD experience when working with cloud native applications. I would be remiss not to mention the VS Code extension for Kubernetes, which also brings useful tooling to the mix.

** If you are just getting started with Azure, I would submit that Cloud Native is the place to start.

Cloud Architect Master Class

May 5, 2018

The cloud debate is over and there is no doubt that the public cloud is going to be the backbone for enterprise IT. But are we ready to make this shift? Do we have the trained staff, especially Cloud Solution Architects (CSAs), to help us make this transition? CSAs are key to any company’s cloud transformation objectives – they are responsible for defining cloud adoption plans and setting up cloud application guidelines as well as management and monitoring strategies. In addition, CSAs are also expected to provide hands-on technical leadership. A cursory search on Glassdoor, at the time of writing, brings back 7000+ open CSA positions just in the US.

This 3-day immersive training will be conducted by Vishwas Lele, a five-time Azure MVP and Microsoft Regional Director. Vishwas has been working with cloud technologies since 2007 and has helped dozens of commercial and private-sector companies adopt the cloud. Over the same period, Vishwas has trained thousands of developers through his writing, his conference and user group presentations, and his Pluralsight and Channel 9 content.

This Azure CSA Master Class is custom designed to help you take your existing architecture and design skills into the realm of cloud computing. We will quickly move beyond topics like “What is an App Service?” or “How do I set up a highly available VM?” and discuss real-world guidance, best practices and constraints associated with using these services. Attendees are encouraged to watch Azure Essentials in preparation for the course, allowing us to move past the fundamentals quickly.

This class is filled with demos and code to help you develop a deep understanding of key concepts. Since we have such a wide range of topics to cover, we will not have time for hands-on labs during the regular class hours. Instead, attendees are expected to fork the code repo created for the class and experiment on their own.

The ultimate goal of this class is to engender a cloud thinking mindset. Cloud thinking is a mindset that goes beyond moving an existing application to the cloud or building a new application using cloud native services. Cloud thinking is a solution-focused approach to building applications that maximize the benefits that the cloud has to offer.

Don’t miss out on this unique opportunity to attend this class, extend your skills, and benefit from Vishwas’ experience on numerous cloud projects and his insights into cloud applications and patterns. Vishwas is planning to take time away from his project work to offer this class twice a year (this class is being presented by Vishwas in a personal capacity, not by his employer AIS).

Target Audience

Any architect, project lead or senior developer would benefit greatly from the class.

Duration

Three very intense days.

Upcoming Classes

- Summer 2018: June 18-20, 2018, Reston, VA

Contact AzureMasterClass@Mayusha.com for more information or register here

Why Cloud / Why Azure?

- The “big switch”

- Review of commercial clouds

- Azure platform evolution

- Azure differentiators

What is Azure CSA?

- The role of the Azure CSA

- People and process changes

- Cloud Oriented Programming

- Cost Economics

IaaS Essentials

- Software defined everything

- Compute – VM, Scale Set, ACI, Batch

- Networking – VNET / NSG / UDR / Express Route

- Storage – Blobs / premium / file service / cool storage

PaaS Essentials

- Cloud Services – Web and worker roles

- App Service (Web, Mobile)

- Service Fabric – Windows and Linux

- Container Service – AKS & ACI

- Functions – Serverless

Security Essentials

- Security Center

- Compliance

- Security Controls

- Key Vault

- Auditing and Logging

DevOps Essentials

- CI & CD Toolchain (TFS and Jenkins)

- ARM

- PowerShell CLI

- Load Testing Monitor

- OMS

- AppInsights

- Service Catalog

Identity Essentials

- Azure AD – B2B, B2C

- Federation

- Azure AD Connect

- Privileged identity management (MFA, timed and conditional access)

Data Essentials

- Azure SQL Database

- Cosmos DB

- Azure Databricks

- Azure ML

Group Discussion / Call to Action

- Lift-n-shift & cloud native

- Cloud adoption strategies

- Pitfalls and lessons learnt

- Staying current with the Cloud cadence

Why #PaaS adoption will continue to grow

January 16, 2017

David Linthicum in his blog post argues that PaaS adoption is not where it was initially predicted to be, and now it may be too late for PaaS. I respectfully disagree. Here is why:

- I have previously written about the benefits of PaaS – features like OS patching, auto-healing from hardware and software failures, and the ability to leverage PaaS building blocks (database as a service, cache as a service, identity as a service) continue to be relevant.

Customers are finding that a pure IaaS-based lift-n-shift to the cloud is a step in the right direction, but in the end many of the core problems experienced on-premises remain unresolved. This is why customers are looking to undertake “just enough” refactoring during a lift-n-shift, moving from pure compute (VMs) to a Cloud Services approach.

- David alludes to strong IaaS tools from AWS and Azure grabbing all the attention. In fact, IaaS tools are moving towards PaaS. One of the most important IaaS tools on Azure is VM Scale Sets: provide a single VM image as input and you get an auto-scaled cluster.

- David talks about developers being confined in a PaaS sandbox, citing Heroku and Oracle Cloud as examples. Once again, PaaS platforms have quickly evolved to offer a wide array of choices. Consider one of the popular PaaS services on Azure, Azure App Service: it offers first-class support for ASP.NET, Node, Java, PHP and Python (even PowerShell). You can even bring your own Docker image to App Service.

- David talks about IaaS as a better fit for DevOps than PaaS. Once again, PaaS platforms like App Service already have built-in DevOps toolchain hooks for CI/CD, deployment slots, monitoring and testing in production. App Service can be deployed to a customer’s cluster, allowing customers to marry PaaS with hybrid / on-premises deployments. Furthermore, PaaS services like Azure Service Fabric can be deployed in Azure, on-premises or even in AWS.

- PaaS is going to be even more important as cloud bills continue to rise. Organizations are realizing that they typically use a very small percentage of the CPU capacity they are paying for. This is where the high-density deployment offered by PaaS comes in.

- Finally, popularity of Docker is bringing renewed focus on PaaS – I recently wrote about “Dockerization of Azure PaaS”
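The density argument is essentially a bin-packing problem. The sketch below is hypothetical and uses illustrative numbers rather than measurements: it counts how many nodes a set of application components needs when packed with the first-fit decreasing heuristic, with each component's average CPU demand expressed as a percentage of one node. `DensityPlanner` and `NodesNeeded` are made-up names, not an Azure API.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical illustration of high-density packing (not any Azure API).
// Each component's average CPU demand is given as a percentage of one node.
public static class DensityPlanner
{
    // First-fit decreasing: place the largest components first, each on the
    // first node that still has room; open a new node only when none does.
    public static int NodesNeeded(IEnumerable<int> cpuPercentDemands, int nodeCapacityPercent = 100)
    {
        var freeCapacity = new List<int>(); // remaining CPU % on each node

        foreach (var demand in cpuPercentDemands.OrderByDescending(d => d))
        {
            if (demand <= 0 || demand > nodeCapacityPercent)
                throw new ArgumentOutOfRangeException(nameof(cpuPercentDemands),
                    "Each demand must be between 1 and the node capacity.");

            int node = freeCapacity.FindIndex(free => free >= demand);
            if (node >= 0)
                freeCapacity[node] -= demand;                   // pack onto an existing node
            else
                freeCapacity.Add(nodeCapacityPercent - demand); // open a new node
        }
        return freeCapacity.Count;
    }
}
```

With ten components that each average 10% CPU, `NodesNeeded` returns 1, whereas giving each component its own VM means paying for ten. Real schedulers also weigh memory, affinity and headroom for load spikes, which this sketch ignores.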

So while it has taken longer than expected, the popularity of PaaS is rising and not waning, IMHO.

Sorry, I have been delinquent in posting meeting summaries from our Azure Gov Meetup. Here is a quick description of our last three meetings.

But first, we have a new venue! We are now meeting at 1776 DC, a global business incubator for startups and a great setting for our meetup. If you have not been to 1776, it is definitely worth checking out.

Our 7th meeting was about #opensource in the government. The main presenters were Evong Nham Chung and Adam Clater from Red Hat. Adam and Evong talked about the Microsoft and Red Hat partnership, as well as the availability of Red Hat OpenShift in the Azure Marketplace. The main presentation was followed by Brian Harrison, Cloud Solution Architect, Microsoft, who presented an overview of Microsoft’s open source initiatives.

Our 8th meeting was kicked off by Andrew Weiss, Federal Solutions Engineer, Docker, and Will Kinard, CTO, Boxboat, with a discussion on modernizing applications with Docker and Azure. Next, Michael S. Hansen, Principal Investigator, National Heart, Lung, and Blood Institute, NIH, presented a very interesting use case with "Gadgetron", which uses containers for image processing workloads in the cloud. Finally, John Morello, CTO, Twistlock, a Docker partner and open source contributor, presented their solution for container security.

Our 9th meeting was focused on #IOT. Jeff King, Senior Solutions Architect, Microsoft, and Brandon Rohrer, Cloud Solution Architect, Microsoft, presented a very interesting case study on how sensor (IoT) devices are providing new crime-fighting tools and real-time situational awareness for law enforcement. They also talked about in-car and body-worn camera systems, drones and aerial surveillance vehicles that are using the cloud to give officers a real-time, connected view of the other first responders around them. Following the main presentation, Yared Tsegaye, Senior Software Engineer, AIS, talked about a solution developed for a large machine-tooling company that aimed to re-platform its existing machine optimization software to be lower-cost, yet more scalable and flexible. Technologies that made up the solution included Azure IoT Hub, R, Azure ML and Power BI. Next, Stephen Bates, Director, Advanced Analytics, OSIsoft, provided a summary of federal use cases, the ramp to Azure, and an overview of OSIsoft as a Microsoft partner. Finally, Ryan Socal, Senior Program Manager, Azure Government, Microsoft, provided a preview of the one-stop documentation resource for Azure Government.

As you can see from the above meeting descriptions, we had a host of very interesting topics and conversations. Please register here to get updates about upcoming meetings – https://www.meetup.com/DCAzureGov/. Hope to see you at the next meeting!

The sixth #AzureGov meetup took place on July 25th at Microsoft’s K Street office.

Like the previous meetups, this meetup also began with a networking session.

After the networking session Andrew Stroup, Director of Product and Technology, White House Presidential Innovation Fellows, provided insights and discussion on how the federal government is tackling the procurement of cloud technologies by building a double-sided marketplace, Apps.gov.

Apps.gov is focused on creating easier pathways for tech companies to enter the federal government market, and on giving federal employees a single place to discover, explore and procure these products. Andrew took several questions about how products are registered with Apps.gov, the market size, and how cloud solutions like AzureGov are available on Apps.gov.

Following Andrew’s session, Martin Heinrich, Director of Enterprise Content and Records Management, CGI, discussed CGI’s recently-launched Records Management as a Service (RMaaS) which combines the Microsoft Azure Government Cloud and OpenText’s Content Suite with CGI’s extensive consulting expertise and implementation services.

Hope you can join us for the next meeting on August 31st that will feature Open Source in government. For more information, please visit

5th #AzureGov Meetup – How to get an App ATO, L4 certification update, P-ATO, PowerBI Embedded

July 9, 2016

The fifth #AzureGov meetup took place on June 29th at Microsoft’s K Street office.

Like the previous meetups, this meetup also began with a networking session. A hot topic of conversation amongst the attendees was the recent announcement that AzureGov achieved a High Impact Provisional Authority to Operate (P-ATO), the highest impact level for FedRAMP accreditation. In other words, AzureGov can now host high-impact level data and applications. Many saw this as a turning point in AzureGov adoption by federal and state agencies.

After the networking session, Nate Johnson, Senior Program Manager in the Azure Security Group, presented an insightful session on how apps can achieve ATO. He talked about the six-step process for apps to achieve ATO.

Nate also talked about how the AzureGov team can help customers achieve an ATO for their apps, including Azure SME support, a customer responsibility matrix, security documentation, blueprints and templates.

The next session was presented by Aaron Barth, Principal PFE, Microsoft Services. Aaron’s session built on the FedRAMP process outlined by Nate earlier. Aaron provided a “practitioner’s perspective” based on his recent experience going through the ATO process for a client. As a developer himself, Aaron was able to demystify what appears to be an onerous process of documenting every security control in the application. He explained that by building on a FedRAMP-compliant platform, a large chunk of the documentation requirements were addressed by the cloud provider (AzureGov).

The following slide (also from Aaron’s deck) was very helpful in depicting i) how the various security controls map to different tiers of the application and ii) how the number of ATO controls that you are responsible for (as an app owner) goes down significantly as you move from on-premises to IaaS to PaaS.

The next presentation was from Brett Goldsmith of AIS. Brett presented a brief demonstration of how his team used the FBI UCR dataset to build nice-looking visualizations with Power BI Embedded.

Finally, I tried to answer a question that has come up in previous meetups: “How can I leverage rich ARM templates in an AzureGov setting?” As you know, not all services (including Azure RM providers) are available in AzureGov today. So here is my brute force approach for working around this *temporary* limitation (disclaimer: this is not an “official” workaround by any means, so please conduct your due diligence regarding licensing etc.)

In a nutshell: provision resources in Azure using ARM (for example, sqlvm-always-on), glean the metadata from the provisioned resources (availability set, ILBs, storage), copy the images to AzureGov, and then use the metadata gleaned in the previous step to provision the resources in AzureGov using ASM.

That said, hopefully we will not need to use the aforementioned workaround for long. New ARM resource providers are being added to AzureGov at a fairly rapid clip. In fact, I just ran a console program to dump all resource providers; the output is pasted below. Notice the addition of providers such as Storage V2.

```text
Provider Namespace Microsoft.Backup
ResourceTypes:
    BackupVault

Provider Namespace Microsoft.ClassicCompute
ResourceTypes:
    domainNames
    checkDomainNameAvailability
    domainNames/slots
    domainNames/slots/roles
    virtualMachines
    capabilities
    quotas
    operations
    resourceTypes
    moveSubscriptionResources
    operationStatuses

Provider Namespace Microsoft.ClassicNetwork
ResourceTypes:
    virtualNetworks
    reservedIps
    quotas
    gatewaySupportedDevices
    operations
    networkSecurityGroups
    securityRules
    capabilities

Provider Namespace Microsoft.ClassicStorage
ResourceTypes:
    storageAccounts
    quotas
    checkStorageAccountAvailability
    capabilities
    disks
    images
    osImages
    operations

Provider Namespace Microsoft.SiteRecovery
ResourceTypes:
    SiteRecoveryVault

Provider Namespace Microsoft.Web
ResourceTypes:
    sites/extensions
    sites/slots/extensions
    sites/instances
    sites/slots/instances
    sites/instances/extensions
    sites/slots/instances/extensions
    publishingUsers
    ishostnameavailable
    sourceControls
    availableStacks
    listSitesAssignedToHostName
    sites/hostNameBindings
    sites/slots/hostNameBindings
    operations
    certificates
    serverFarms
    sites
    sites/slots
    runtimes
    georegions
    sites/premieraddons
    hostingEnvironments
    hostingEnvironments/multiRolePools
    hostingEnvironments/workerPools
    hostingEnvironments/multiRolePools/instances
    hostingEnvironments/workerPools/instances
    deploymentLocations
    ishostingenvironmentnameavailable
    checkNameAvailability

Provider Namespace Microsoft.Authorization
ResourceTypes:
    roleAssignments
    roleDefinitions
    classicAdministrators
    permissions
    locks
    operations
    policyDefinitions
    policyAssignments
    providerOperations

Provider Namespace Microsoft.Cache
ResourceTypes:
    Redis
    locations
    locations/operationResults
    checkNameAvailability
    operations

Provider Namespace Microsoft.EventHub
ResourceTypes:
    namespaces
    checkNamespaceAvailability
    operations

Provider Namespace Microsoft.Features
ResourceTypes:
    features
    providers

Provider Namespace microsoft.insights
ResourceTypes:
    logprofiles
    alertrules
    autoscalesettings
    eventtypes
    eventCategories
    locations
    locations/operationResults
    operations
    diagnosticSettings
    metricDefinitions
    logDefinitions

Provider Namespace Microsoft.KeyVault
ResourceTypes:
    vaults
    vaults/secrets
    operations

Provider Namespace Microsoft.Resources
ResourceTypes:
    tenants
    providers
    checkresourcename
    resources
    subscriptions
    subscriptions/resources
    subscriptions/providers
    subscriptions/operationresults
    resourceGroups
    subscriptions/resourceGroups
    subscriptions/resourcegroups/resources
    subscriptions/locations
    subscriptions/tagnames
    subscriptions/tagNames/tagValues
    deployments
    deployments/operations
    operations

Provider Namespace Microsoft.Scheduler
ResourceTypes:
    jobcollections
    operations
    operationResults

Provider Namespace Microsoft.ServiceBus
ResourceTypes:
    namespaces
    checkNamespaceAvailability
    premiumMessagingRegions
    operations

Provider Namespace Microsoft.Storage
ResourceTypes:
    storageAccounts
    operations
    usages
    checkNameAvailability
```

Here is the code to print all resource providers available in AzureGov:

```csharp
// Assumes the GetAuthorizationHeader helper and the AzureResourceManagerEndpoint and
// SubscriptionId fields defined in the AzureGovDemo program (February 29, 2016 post).
static void Main(string[] args)
{
    var token = GetAuthorizationHeader();
    var credentials = new Microsoft.Rest.TokenCredentials(token);
    var resourceManagerClient = new Microsoft.Azure.Management.Resources.ResourceManagementClient(new Uri(AzureResourceManagerEndpoint), credentials)
    {
        SubscriptionId = SubscriptionId,
    };

    // Enumerate every registered resource provider and the resource types it exposes
    foreach (var provider in resourceManagerClient.Providers.List())
    {
        Console.WriteLine(String.Format("Provider Namespace {0}", provider.NamespaceProperty));
        Console.WriteLine("ResourceTypes:");
        foreach (var resourceType in provider.ResourceTypes)
        {
            Console.WriteLine(String.Format("\t {0}", resourceType.ResourceType));
        }
        Console.WriteLine("");
    }
}
```

The fourth #AzureGov meetup took place on May 24th at Microsoft’s K Street office.

After a short networking session, Jack Bienko, Deputy for Entrepreneurship Education, US Small Business Administration talked about the upcoming National Day of Civic Hacking on June 4th. You can find more information about the event on Jack’s recent blog post.

Next, Bill Meskill, Director of Enterprise Information Services, Office of the Under Secretary of Defense for Policy, kicked off a case study presentation of an Intelligent News Aggregator and Recommendation solution that his group has developed on Azure. He started out by describing the motivations for building this solution, summarizing the business objectives and explaining why it made sense to develop it in the cloud.

Next, Brent Wodicka and Jim Strang from AIS presented an overview of the architecture, followed by a brief demo. At a high level, the system relies on Azure WebJobs to pull news feeds from a number of sources. The downloaded news stories are then used to train an Azure Machine Learning model, an LDA (Latent Dirichlet Allocation) implementation of a topic model. The trained model is then made available as a web service to serve recommendations based on users’ preferences. There is also an API Management facade that allows their on-premises systems (SharePoint) to talk to the Azure-hosted solution. Azure API Management security policies, such as validating JWTs and restricting incoming IP addresses, are used to secure the connectivity between the on-premises systems and the Azure ML based solution.
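The recommendation step described above can be sketched as follows, under stated assumptions: the LDA model assigns each article a topic distribution, a user's preferences are expressed over the same topics, and articles are ranked by cosine similarity between the two. The actual scoring used in the solution was not described at the meetup, so `TopicRecommender` and its methods are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical sketch: rank articles for a user by comparing topic distributions.
public static class TopicRecommender
{
    // Cosine similarity between two topic vectors (1 = same direction, 0 = unrelated).
    public static double Cosine(double[] a, double[] b)
    {
        if (a.Length != b.Length)
            throw new ArgumentException("Vectors must cover the same set of topics.");

        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.Length; i++)
        {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        if (normA == 0 || normB == 0)
            return 0; // an all-zero vector carries no preference signal
        return dot / (Math.Sqrt(normA) * Math.Sqrt(normB));
    }

    // Returns the names of the 'count' articles whose topic mix best matches the user's.
    public static List<string> Recommend(double[] userTopics, IDictionary<string, double[]> articleTopics, int count)
    {
        return articleTopics
            .OrderByDescending(article => Cosine(userTopics, article.Value))
            .Take(count)
            .Select(article => article.Key)
            .ToList();
    }
}
```

Cosine similarity is only one reasonable choice here; it ignores vector length, so an article's ranking depends on its topic proportions rather than on how many topics it touches.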

Finally, Andrew Weiss, Azure Solution Architect, Microsoft, briefly talked about leveraging Azure services to help solve hackathon challenges.

This guest post is from Brent Wodicka at Applied Information Sciences.

The third #AzureGov meetup was held at the Microsoft Technology Center in Reston on April 27th. This meetup was again well attended and featured three great presentations relating to next-gen cloud applications for government and microservices.

Mehul Shah got the session started with an overview of the Cortana Intelligence Suite, including the latest announcements coming out of BUILD 2016. The talk started with a lap around the platform, and then focused in on some key areas where features are rolling out quickly – including the Cognitive Services APIs. The session included some good discussion from the group related to potential use cases, and the ability to adapt the services to any number of business verticals. More about the Cortana Intelligence Suite here, and Build 2016 here.

The second session focused on building microservices on Azure. Chandra Sekar led a discussion that began with the options you have for building microservices on the platform, including container technologies and Azure Service Fabric. Chandra then went into detail and showed a great demo of a sample microservice built on Azure Service Fabric. The demo explained how stateful microservices can be built using Service Fabric, and demonstrated the resiliency of this model by walking through a simulated “failure” of the primary service node and the recovery of the service, which happened very quickly and maintained its running state. Cool stuff!

The final speaker was Keith McClellan, a Federal Programs Technical Lead with Mesosphere. Keith began by talking about what Mesosphere has been up to, its maturity in the market, and the recent announcement of the DC/OS project. DC/OS is an exciting open source technology (spearheaded by Mesosphere) that offers an enterprise-grade, data-center scale operating system – meant to be a single location for running workloads of almost any kind. Keith walked through provisioning containers and other interesting services (including a Spark data cluster for big data analysis) on the platform, and actually provisioned the entire stack on Azure infrastructure. I was impressed with the number of services already available to run on the platform today. More about DC/OS here.

If you haven’t already, join the DC Azure Government meetup group here, and join us for the next meeting. You can also opt to be notified about upcoming events or when group members post content.

The second #AzureGov meetup was held in Reston on March 15th. It was well attended (see the picture below of Todd presenting to the audience; standing next to Todd is Karina Homme, Principal Program Manager for AzureGov and co-organizer of the meetup).

This meetup was kicked off by Todd Neison from NOAA. Todd gave an excellent presentation on his group’s journey into #Azure, from the decision to move to the cloud, to the challenges of getting the necessary approvals, to the focus on #PaaS. Todd’s perspective on adopting the cloud as a federal government employee can be very valuable to anyone looking to move to the cloud.

The next segment was presented by Matt Rathbun. Matt reviewed the recent news on #AzureGov compliance including i) Microsoft cloud for the government has been invited to participate in a pilot for High Impact Baseline and expects to get a provisional ATO by the end of the month ii) Microsoft has also finalized the requirements to meet DISA Impact Level 4 iii) Microsoft is establishing two new physically isolated Azure Government regions for DoD and DISA Impact Level 5.

You can read more about the announcements here. What stood out for me was the idea of fast track compliance that is designed to speed up the compliance from the current annual certification cadence.

The final segment was presented by the Red Hat team and Andrew Weiss from Microsoft. They demonstrated the #OpenShift PaaS offering running on Azure. OpenShift is a container-based application development platform that can be deployed to Azure or on-premises. As part of the presentation, they took an ASP.NET Core 1.0 based web application and hosted it on the OpenShift platform. Next, they scaled up the web application using OpenShift and the underlying Kubernetes container cluster manager.

Hope you can join us for the next meeting. Please register with the meetup here to be notified of the upcoming meetings.

#AzureGov Meetup & Support for ARM

February 29, 2016

Thanks to those who joined us for the inaugural #AzureGov meetup on Feb 22nd. For those who could not join, here is a quick update.

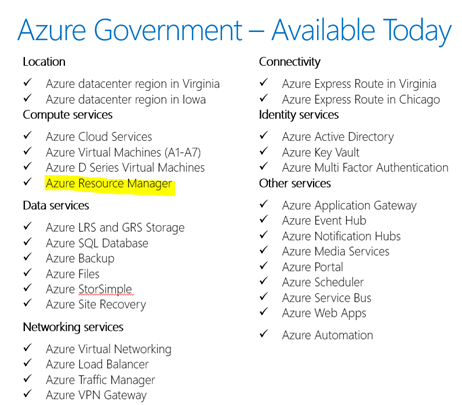

We talked about the services that are available in AzureGov today ( see picture below).

Note that Azure Resource Manager (ARM) is now available in AzureGov. Why am I singling out ARM from the list above? Mainly because most of the services that are available today, such as Azure Cloud Services, Azure Virtual Machines and Azure Storage, are based on the classic model (also known as the Service Management model). ARM is the “new way” to deploy and manage the services that make up your application in Azure. The differences between ARM and the classic model are described here. Further note that the availability of ARM is only the first step. We also need the Resource Managers (RMs) for various services like Compute and Storage V2 (ARM in turn calls the necessary RM to provision a service).
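ARM resource IDs follow the pattern `/subscriptions/{subscriptionId}/resourceGroups/{resourceGroup}/providers/{namespace}/{type}/{name}`, and the `{namespace}` segment (for example `Microsoft.Storage`) identifies the resource provider that ARM hands the request to. The hypothetical `ArmResourceId` helper below is a sketch that pulls those parts out of a top-level resource ID; nested resource types (such as `sites/slots`) are deliberately out of scope.

```csharp
using System;

// Hypothetical helper (not part of any Azure SDK) that splits a top-level ARM resource ID:
// /subscriptions/{sub}/resourceGroups/{rg}/providers/{namespace}/{type}/{name}
public sealed class ArmResourceId
{
    public string SubscriptionId { get; private set; }
    public string ResourceGroup { get; private set; }
    public string ProviderNamespace { get; private set; } // the provider ARM routes to
    public string ResourceType { get; private set; }
    public string Name { get; private set; }

    public static ArmResourceId Parse(string id)
    {
        if (id == null)
            throw new ArgumentNullException(nameof(id));

        var parts = id.Trim('/').Split('/');
        if (parts.Length != 8
            || !parts[0].Equals("subscriptions", StringComparison.OrdinalIgnoreCase)
            || !parts[2].Equals("resourceGroups", StringComparison.OrdinalIgnoreCase)
            || !parts[4].Equals("providers", StringComparison.OrdinalIgnoreCase))
        {
            throw new FormatException("Not a top-level ARM resource ID: " + id);
        }

        return new ArmResourceId
        {
            SubscriptionId = parts[1],
            ResourceGroup = parts[3],
            ProviderNamespace = parts[5],
            ResourceType = parts[6],
            Name = parts[7],
        };
    }
}
```

Parsing `/subscriptions/0000/resourceGroups/demo-rg/providers/Microsoft.Storage/storageAccounts/demostore` yields the provider namespace `Microsoft.Storage`, which matches the `Microsoft.Storage` namespace that appears when you list the resource providers registered in a subscription.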

Since I could not find sample code to call ARM in AzureGov, here is a code snippet that you may find handy.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.IdentityModel.Clients.ActiveDirectory;
using Microsoft.Azure.Management.Resources;
using Microsoft.Azure.Management.Resources.Models;
using Microsoft.Rest;
using Microsoft.Rest.Azure;

namespace AzureGovDemo
{
    class Program
    {
        // Set well-known client ID for Azure PowerShell
        private static string ClientId = "1950a258-227b-4e31-a9cf-717495945fc2";
        private static string TenantId = "XXX.onmicrosoft.com";
        private static string LoginEndpoint = "https://login.microsoftonline.com/";
        // Token audience (resource) for the AzureGov management APIs
        private static string ServiceManagementApiEndpoint = "https://management.core.usgovcloudapi.net/";
        private static string RedirectUri = "urn:ietf:wg:oauth:2.0:oob";
        private static string SubscriptionId = "XXXXXXXX";
        // ARM endpoint for AzureGov
        private static string AzureResourceManagerEndpoint = "https://management.usgovcloudapi.net";

        static void Main(string[] args)
        {
            var token = GetAuthorizationHeader();
            var credentials = new Microsoft.Rest.TokenCredentials(token);
            var resourceManagerClient = new Microsoft.Azure.Management.Resources.ResourceManagementClient(new Uri(AzureResourceManagerEndpoint), credentials)
            {
                SubscriptionId = SubscriptionId,
            };

            Console.WriteLine("Listing resource groups. Please wait...");
            Console.WriteLine("----------------------------------------");
            var resourceGroups = resourceManagerClient.ResourceGroups.List();
            foreach (var resourceGroup in resourceGroups)
            {
                Console.WriteLine("Resource Group Name: " + resourceGroup.Name);
                Console.WriteLine("Resource Group Id: " + resourceGroup.Id);
                Console.WriteLine("Resource Group Location: " + resourceGroup.Location);
                Console.WriteLine("----------------------------------------");
            }

            Console.WriteLine("Press Enter to terminate the application");
            Console.ReadLine();
        }

        // Acquires an Azure AD access token interactively. The interactive sign-in
        // dialog must run on a single-threaded apartment (STA) thread.
        private static string GetAuthorizationHeader()
        {
            AuthenticationResult result = null;
            var context = new AuthenticationContext(LoginEndpoint + TenantId);

            var thread = new Thread(() =>
            {
                result = context.AcquireToken(
                    ServiceManagementApiEndpoint,
                    ClientId,
                    new Uri(RedirectUri));
            });
            thread.SetApartmentState(ApartmentState.STA);
            thread.Name = "AcquireTokenThread";
            thread.Start();
            thread.Join();

            if (result == null)
            {
                throw new InvalidOperationException("Failed to obtain the JWT token");
            }

            string token = result.AccessToken;
            return token;
        }
    }
}
```

Hope this helps and I do hope you will register for the #AzureGov meetup here – http://www.meetup.com/DCAzureGov/

Thanks to my friend Gaurav Mantri, fellow Azure MVP and developer of Cloud Portam (www.CloudPortam.com), an excellent tool for managing Azure services. Gaurav figured out that we need to set the well-known client ID for PowerShell.